How Does Artificial Intelligence Influence Government?

Artificial intelligence can empower authoritarians but also support democracies.

What interrupts your morning commute? Coffee runs? Traffic jams? Maybe train delays?

What about artificial intelligence (AI)?

For residents of China’s Xinjiang Province, heading out into the world can involve interacting with artificial intelligence at security checkpoints. There, officials scan residents’ IDs, take their photos, and install monitoring software on their phones.

The Chinese Communist Party (CCP) claims that high-tech surveillance keeps the province safe from violence and extremism. Chinese AI software can flag potential enemies of the state. But activists say it’s just one part of an overarching system to subjugate China’s Uyghur population. The CCP suspects this Muslim, Turkic-speaking minority ethnic group of harboring extremist and separatist ideas.

As technology advances, authoritarian governments are deploying increasingly sophisticated tools to track citizens. Advanced technology can monitor citizen behavior and crush political dissent. But experts say that, as a technological tool, AI can both repress and protect citizens, and even strengthen democracy.

This resource will explore how AI empowers the CCP and examine the consequences this technology could have for citizens around the world.

What Is Artificial Intelligence?

AI refers to a computer’s ability to perform tasks that humans have historically done. That technology is also capable of learning and adapting to new situations on its own. AI can operate without following explicit instructions from users.

AI is meant to collect data faster and in far greater quantities than humans can. Its ultimate goal is to improve human decision-making, which is often subject to bias and error. As cars have become more automated, for example, certain accidents have become less common: vehicles with AI-assisted automatic braking can avoid many rear-end collisions.

Whether we know it or not, we interact with AI all the time. Social media platforms use AI to send targeted advertisements, and smart home devices can learn their owners’ preferences for how warm or cool to keep the house. Meanwhile, people playing chess online often face off against AI opponents and lose. Computers have been defeating top-ranked chess players since 1997, when IBM’s Deep Blue beat world champion Garry Kasparov.

Artificial Intelligence in Xinjiang

In Xinjiang, by contrast, the CCP uses artificial intelligence to control the population.

Although the region has largely been under Chinese rule for hundreds of years, it has a different cultural history from the rest of the country. To this day, some of its residents wish to declare independence.

Uyghurs in Xinjiang have long chafed under discriminatory CCP policies that deny them economic opportunities. After demonstrations in the province’s capital, Urumqi, led to the deaths of around two hundred people in 2009, the CCP began planning a severe crackdown. In 2017, the government began rounding up and interning more than one million mostly Uyghur Muslims in state-run camps. At those facilities, prisoners are regularly tortured. Meanwhile, Uyghurs living outside the camps have experienced surveillance, forced labor, and forced sterilization.

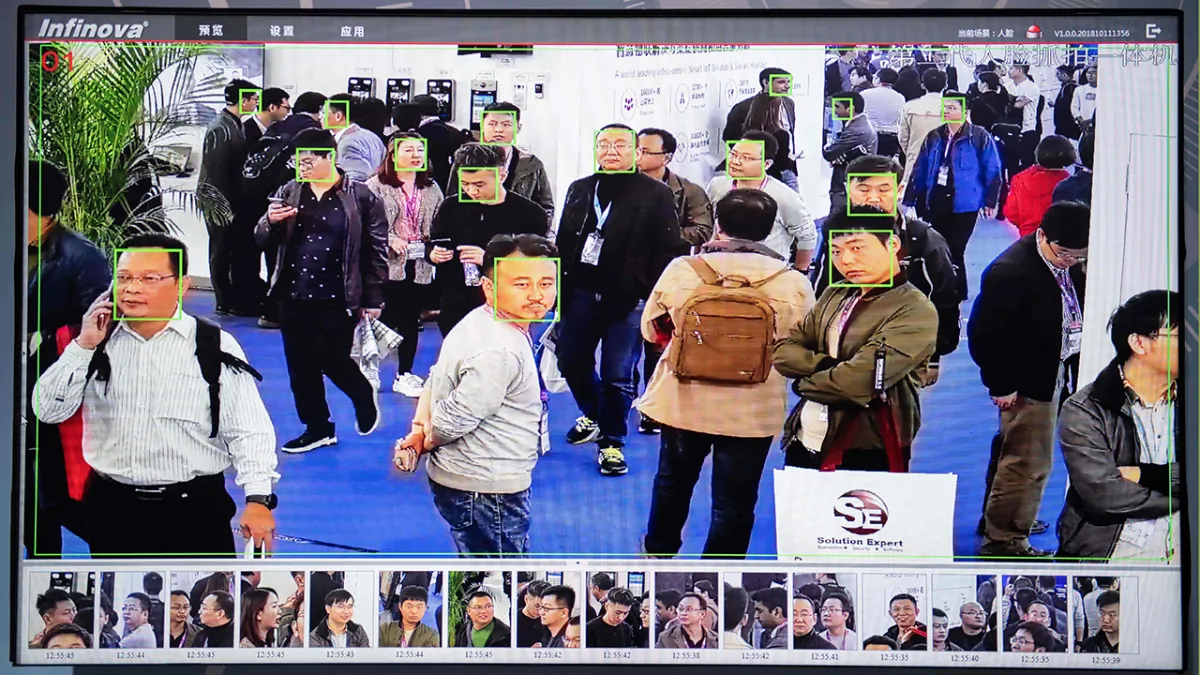

Across Xinjiang, AI-powered facial recognition technology tracks people’s movements, and authorities also collect voice samples and even DNA. That information feeds into an algorithm the government uses to generate a “social score” for each citizen. That score ranks residents based on their perceived risk to the CCP. This metric has also determined whether individuals should be sent to reeducation camps or allowed to remain at home.

In recent years, both the United States and the United Nations have described the Chinese government’s actions as serious human rights violations that could constitute genocide and crimes against humanity. That has not deterred the CCP, however, from using technology to consolidate its authoritarian grip on power.

The CCP is now an international leader in AI investment—which activists say will have global implications. Reports indicate the CCP, largely through its Belt and Road Initiative, is now exporting AI and surveillance technology to countries including Angola, Ethiopia, Pakistan, Venezuela, and Zimbabwe. Such technology has allowed governments to monitor activists, journalists, lawyers, and other perceived political opponents. The export of advanced surveillance technology allows governments to eavesdrop on phone calls, read text messages, track an individual’s location, and record nearby conversations—all in total secrecy.

How Can Artificial Intelligence Support Democracy?

Despite the risks artificial intelligence poses, advocates say the technology can also protect citizens and strengthen democracy. For example, machine learning, or AI’s ability to learn by itself, can help computers improve their security systems, which makes it more difficult for hackers to influence elections.

Additionally, AI can verify signatures on mail-in ballots, which have been critical vehicles for voting throughout the COVID-19 pandemic. AI is also being used to combat disinformation, including by detecting and stopping deepfake videos, which are highly realistic video forgeries.

Will Countries Develop AI Policies That Uphold Democratic Values?

In recent years, governments and international organizations have debated the ethical development of AI technology.

The Organization for Economic Cooperation and Development (OECD) has played a leading role in such conversations. Founded in 1961, the OECD includes many of the world’s most powerful economies and democracies. The organization designs international standards and guidelines on various social, economic, and environmental issues in pursuit of a more prosperous and equitable world.

In 2019, the organization created a robust set of values-based principles and recommendations for policymakers to ensure that AI development respects human rights and democratic values.

Let’s explore those principles and recommendations.

Values-based principles

- Inclusive growth, sustainable development, and well-being: AI should promote prosperity for all by focusing on reducing existing inequalities and working to slow climate change.

- Human-centered values and fairness: AI developers should include safeguards to ensure their technology respects human rights and democratic values.

- Transparency and explainability: The public should be aware of advances in AI technology, and governments should foster a better understanding of AI.

- Robustness, security, and safety: Governments and companies using AI should ensure their technology is secure and traceable.

- Accountability: Governments using AI should be accountable to the public.

Recommendations for policymakers

- Investing in AI research and development: Governments should organize public and private investment in AI to ensure the technology’s responsible development.

- Fostering a digital ecosystem for AI: Governments should invest in the digital infrastructure, including affordable high-speed broadband networks, that AI needs to grow.

- Providing an enabling policy environment for AI: Governments should create clear laws and policies that support responsible AI development.

- Building human capacity and preparing for labor market transition: As AI transforms many professions, governments should help workers adapt through job training programs and related services.

- International cooperation for trustworthy AI: Governments should collaborate to share information and create international standards for the responsible development of AI.

However, OECD membership, or agreement to similar principles, does not guarantee that countries will adhere to those guidelines. Such principles are, at most, recommendations with few avenues for actual enforcement.

For every country that uses AI to strengthen democracy, others will likely use it to surveil their people. Ultimately, whether the technology is a greater force for strengthening or subverting democratic institutions remains to be seen.