How Did Humans Come to Live Longer and Healthier Lives?

Explore centuries of innovations that have revolutionized health care.

With global life expectancy approaching seventy-three years, the average person has a good shot at making it to old age. That’s more than our ancestors could say for centuries, and it’s due in large part to major developments in the ways we take care of ourselves.

If you grew up in the United States, odds are you’ve been vaccinated against deadly diseases. You can most likely drink water straight from the faucet. You probably have a health-care provider to visit when you’re sick and can go to one of over six thousand hospitals across the country in emergencies. With all these interventions and more, you can expect to live well into your seventies.

This wasn’t always the case. In 1800, for example, global life expectancy was estimated to be just twenty-nine years. This didn’t mean people died on their thirtieth birthdays; rather, low life expectancy reflected the large number of people at the time who died young. More than one-third of children died before their fifth birthday, and most young women knew at least one person who had died in childbirth. But if someone back then made it through the perils of youth, the idea of that person living seventy years or more was not unfathomable.

How did humans on average begin to live longer and, in many ways, healthier lives?

The answer lies in the history of health care. This lesson explores eight developments and discoveries that have made our modern health-care system what it is today and helped double the average length of our lives.

How did we learn about germs?

Until the late nineteenth century, people commonly believed they caught diseases by breathing noxious, smelly air. Throughout Europe’s various plagues, people tried to protect themselves by breathing through bunches of flowers.

But several scientists in the mid-nineteenth century helped the world identify the true culprit behind many illnesses: microorganisms commonly called germs. One of those scientists was Dr. John Snow.

In 1854, Snow set out to find the source of a deadly cholera outbreak in London's Soho neighborhood. He created a map plotting where victims of the disease lived and found that many resided close to a water pump on Broad Street that he suspected was contaminated. He convinced local officials to remove the pump's handle, and the outbreak subsided. Later, it came to light that water used to wash a cholera-infected baby's diapers had been emptied into a cesspool near the well, contaminating it with disease-causing germs and kicking off the epidemic.

It took time and further research from scientists like Robert Koch and Louis Pasteur for germ theory to be widely accepted. But the idea that tiny organisms cause and spread disease eventually revolutionized health care. Today, germ theory helps us understand how COVID-19 spreads from one person to another.

How did we get modern surgery?

Humans have performed surgeries for millennia. But only recently did the odds of surviving surgery begin to eclipse the odds of dying from it. In the early nineteenth century, these procedures were horrifically painful without modern anesthesia and highly dangerous due to the risk of infection.

The fight against infection got a major boost in the 1860s from Joseph Lister, a British surgeon who applied germ theory to surgical procedures. Lister sought to kill infection-causing microorganisms with carbolic acid, a chemical that had been used to treat sewage in Carlisle, England, and reduce its stench.

Lister’s system of using carbolic acid on hands, instruments, and wounds helped lead to the sterile standards hospitals use today—one reason surgery isn’t the deadly coin toss it used to be.

How did we start washing our hands?

In the nineteenth century, doctors rarely washed their hands. Unfortunately, this meant they could unknowingly hand-deliver dangerous infections to their patients.

However, in the mid-nineteenth century, one doctor learned the importance of handwashing. While working at a hospital in Vienna, Ignaz Semmelweis noticed a pattern: between two maternity wards (one staffed by doctors and one by midwives), women died far more often of a dangerous illness called childbed fever in the ward run by doctors.

Semmelweis knew that doctors often delivered babies after performing autopsies—something midwives didn’t do. He suggested doctors’ hands were contaminated with cadaver particles, which were then introduced into patients, with deadly results.

To combat the problem, he implemented a handwashing regimen, which immediately saved lives. But other doctors ignored his work, angry at the idea that they could be at fault and perhaps insulted by the implication that they were dirty.

Today, Semmelweis is considered a father of modern handwashing. Health experts continue to recommend this basic hygiene practice as one of the best defenses against deadly illnesses such as the flu and COVID-19.

How did we get modern sanitation?

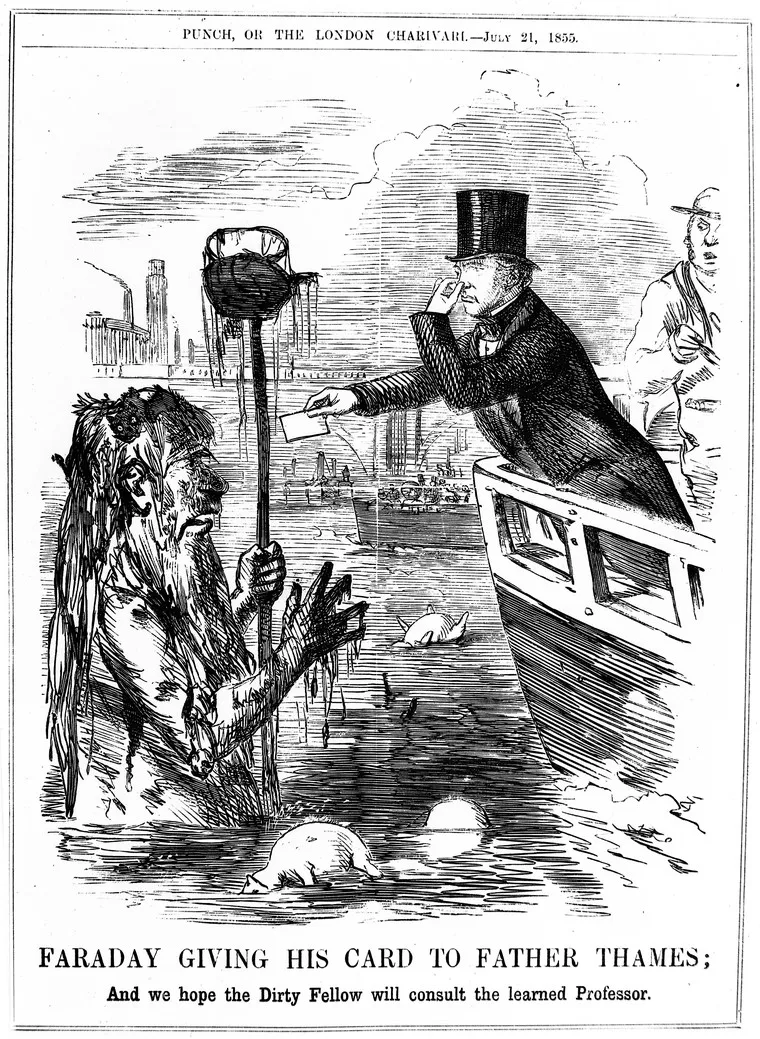

Nineteenth-century city life could be pretty gross. Although today many of us are lucky enough to have toilets in our homes to whisk away our waste through underground sewers, two hundred years ago that waste often made its way into the street. Open sewers and overwhelming amounts of garbage made major cities both dangerously unsanitary and extremely smelly. Perhaps none was smellier than London in the summer of 1858.

London's Thames River had been the final destination for much of the city's waste for centuries. But the volume of waste skyrocketed in the 1800s as the metropolis's population tripled between 1800 and 1860. During that especially hot summer of 1858, the putrid odor from the Thames grew so overpowering that the episode earned the nickname the Great Stink.

With toxic odors overpowering the city (including Britain's Parliament, which overlooked the river), lawmakers moved quickly to overhaul the sanitation system.

By 1875, London had built over a thousand miles of drains and sewers to carry away the city’s waste—a landmark moment in what was later called the sanitary revolution. But today, more than half the world still lacks access to safe sanitation, heightening the risk of illnesses like diarrhea, which kills more than two thousand children per day.

How did we come up with vaccines?

Although today the world’s leading causes of death are heart disease and stroke—conditions known as noncommunicable diseases, which are not contagious—dying of infectious disease used to be far more common.

Scientists have made significant advances on two fronts in the battle against infectious disease: they have developed vaccines, and they have devised treatments that make surviving deadly illnesses more likely.

Vaccines save 2.5 million lives per year. They are also one reason that far fewer of us die from diseases like smallpox and polio.

Though these shots in the arm might seem like a new technology, people have been working toward disease immunity for centuries. One thousand years ago, people in China tried to prevent smallpox by blowing ground-up smallpox scabs up people's noses. In colonial America, enslaved Africans helped introduce the New World to inoculation, a practice that exposed individuals to small doses of smallpox in order to provoke an immune response.

Vaccines entered a new era with the work of British doctor Edward Jenner. He became obsessed with the idea that a bout with cowpox, a mild disease transmitted from cows to people, protected people from getting smallpox. His peers even labeled him the "cowpox bore" because he discussed it so often. In 1796, he introduced pus from a cowpox sore into a cut on an eight-year-old boy's arm and later exposed him to smallpox. The boy didn't get sick, and this new process, vaccination, was named after the Latin word for cow (vacca).

Vaccines remain a crucial weapon in the fight against infectious diseases such as COVID-19, hepatitis, and polio.

How did we learn to treat infectious disease?

Despite the ongoing threat posed by infectious diseases such as COVID-19, it’s clear the world has made tremendous progress in treating many contagious illnesses. Take malaria, for example.

Malaria used to threaten more than half the globe. But recent years have seen steep declines in the number of deaths from this disease—and one reason is medicine.

Modern treatments for malaria have centuries-old roots. One crucial malaria medicine, quinine, comes from the bark of the South American cinchona tree. Different accounts attribute the discovery of the bark's medicinal properties to indigenous peoples, Jesuit missionaries, and even a Spanish countess whose fever, according to legend, the bark cured. In 1820, two French scientists extracted quinine from the bark. The drug later became the key ingredient in tonic water, prompting Winston Churchill's reported quip that the gin and tonic had "saved more Englishmen's lives, and minds, than all the doctors in the Empire."

Another treatment rooted in ancient practice has become an even more effective tool for treating malaria in recent decades. The fourth-century Chinese doctor Ge Hong described using an herb called sweet wormwood to treat fevers. His writings helped guide the research of Tu Youyou, who isolated the compound artemisinin from the plant and shared the 2015 Nobel Prize in Physiology or Medicine for work that led to today's most effective malaria drugs.

How did we start using quarantines to stop the spread of disease?

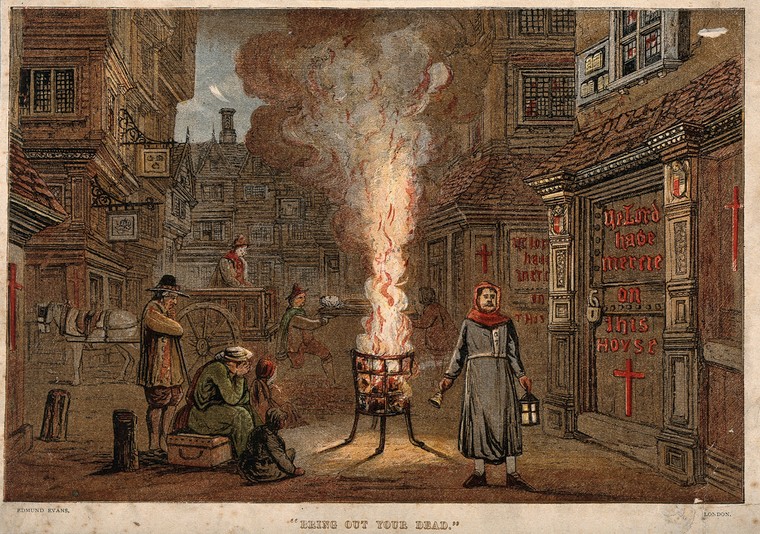

The practice of quarantining is so old that the Bible recommends it to protect people against the spread of leprosy and other diseases. But the term came to prominence in the fourteenth century during one of the worst disease outbreaks the world had ever seen: the Black Death.

This plague killed tens of millions of people. As it ravaged Europe, people sought to protect themselves. One Italian noble demanded that infected people be tossed from town to live or die in nearby fields. Meanwhile, others tried to prevent the disease from entering their cities through quarantines. New rules required ships and visitors to isolate themselves for forty days, or quaranta giorni in Italian, from which the modern term derives.

The age-old practice has taken on new relevance in the era of COVID-19. When the contagious disease ramped up its spread in 2020, officials enacted widespread lockdowns that may have saved millions of lives.

How did we start cooperating globally on public health?

The rise of quarantines also helped introduce the idea of international cooperation on global health.

In the nineteenth century, Europe was struggling to contain the spread of cholera. Countries across the continent had varying rules for how ships arriving from abroad had to quarantine to avoid spreading the disease, leading to costly delays and spoiled goods.

In 1851, delegates from several European countries convened in Paris for the first International Sanitary Conference, which aimed to reconcile these quarantine rules. Though it took years for concrete measures to emerge from the meetings that followed, the conference has been hailed as a milestone in the history of international health cooperation and served as an important precursor to the World Health Organization (WHO).

Today, the WHO provides equipment, resources, and guidance to nongovernmental organizations and national health agencies around the world. Its work includes coordinating responses to international health emergencies, combating the scourge of noncommunicable diseases, and organizing global immunization campaigns. Its crusade against smallpox, which killed an estimated three hundred million people in the twentieth century, led to the disease being declared eradicated in 1980. However, the agency has struggled to mount an effective response to the global coronavirus pandemic and has faced criticism for praising China's handling of the crisis, despite evidence that Chinese officials delayed disease reporting, undercounted cases, and silenced whistleblowers.

Uneven Outcomes

Today, fewer people are dying young and more are making it to their golden years—leading to an average global life expectancy more than double that of our ancestors.

But global averages can obscure important differences between regions and countries. The citizens of high-income Japan, for example, can expect to live more than three decades longer than those in the low-income Central African Republic.

This gap also exists between communities in the same country—even in the same city. In Baltimore in 2015, for instance, one wealthy neighborhood’s average life expectancy was nineteen years higher than that of a poorer community just three miles away. Life expectancy for Black and indigenous Americans has long trailed national averages, reflecting the health effects of generations of discriminatory laws, customs, and institutions—also known as systemic racism.

Divergent outcomes on both a global and local scale have become starkly visible in the era of COVID-19. The United States makes up just 4 percent of the world's population but accounts for around 20 percent of global fatalities from the virus. As of January 2021, more than four hundred thousand Americans had died from COVID-19. Black and Hispanic/Latinx Americans have perished at nearly three times the rate of white Americans, reflecting complex issues in the country relating to racism, access to health care, and rates of preexisting conditions.

And while all eyes are trained on the coronavirus crisis, noncommunicable diseases remain leading causes of death. Conditions like obesity, high blood pressure, and diabetes disproportionately affect many communities of color in the United States. For example, the death rate for Hispanic/Latinx Americans from diabetes is 50 percent higher than for white Americans.

Health care has come a long way over the years through developments in hygiene, sanitation, and vaccines, but much more needs to be done in order for all people to benefit from these gains equally.